Data Engineering: from raw data to valuable insights

What does a data engineer do – and what does it get you?

A data engineer is the architect of an organisation's data infrastructure. He or she ensures that data is properly collected, stored and made accessible for analysis. Sounds technical? It is, but the result is crystal clear: control over your data, insights that drive results, and a foundation for working in a truly data-driven way.

At Blenddata, we believe that any company can get value out of its data, provided it has the right infrastructure and knowledge. That's where data engineering comes in.

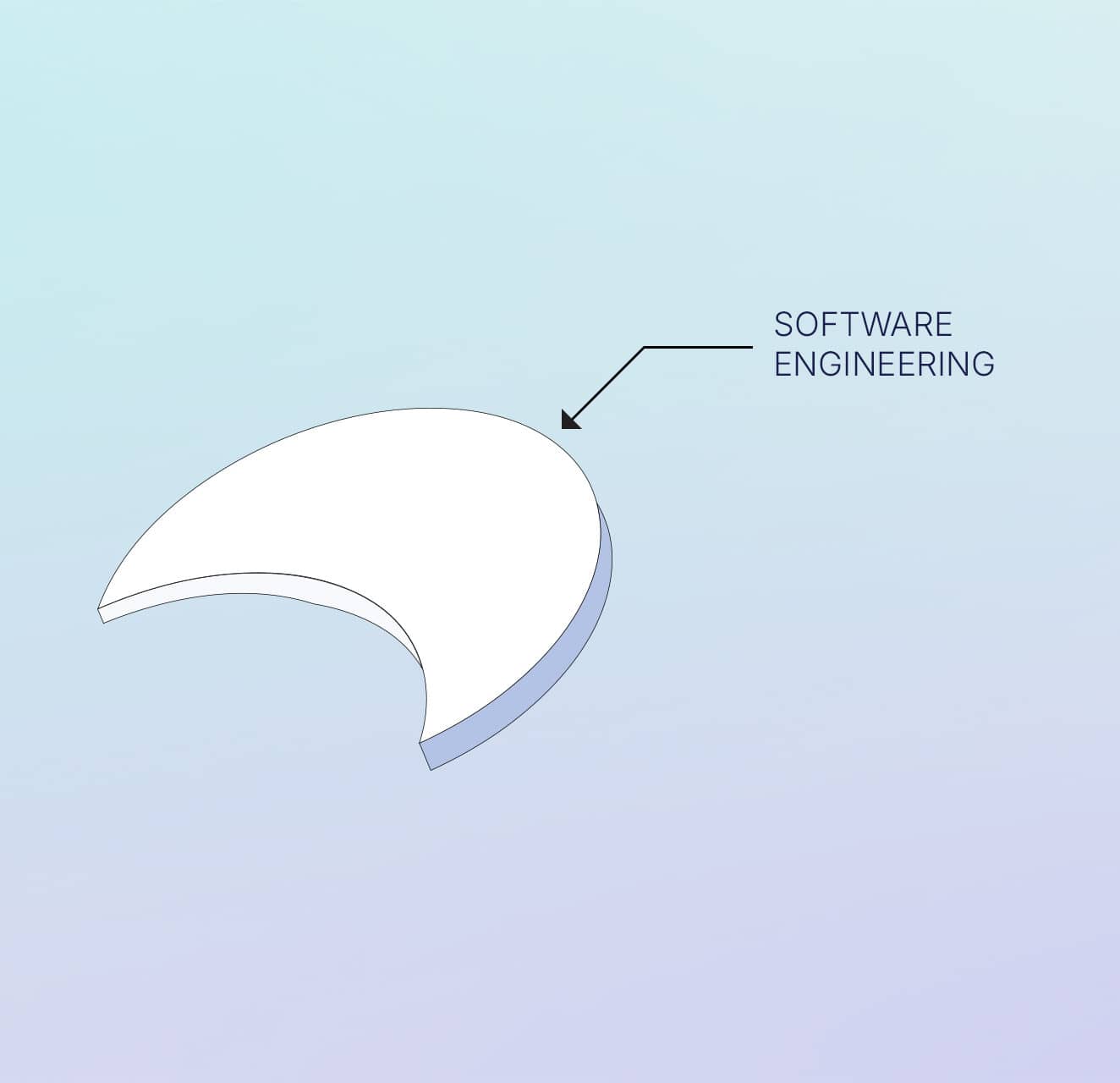

But data engineering does not stand alone. Software engineering is an important foundation of any good data platform.